Using OpenAI Assistants to automate everything

In November 2023, at its DevDay, OpenAI announced the Assistants API. This is a minor revolution: it lets developers build products on top of GPT-4 (among other models) that closely resemble autonomous agents, thanks to:

- Persistent conversation memory

- Data retrieval from documents

- Code interpreter

- External function calls

Because these features are built into the Assistants API, building an agent on top of OpenAI's LLMs takes significantly less development effort. Moreover, the Assistants API can combine these capabilities as needed to produce an answer.
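The following Python sketch shows how these features are enabled together. It builds the JSON body of a `POST /v1/assistants` request without sending it. It assumes the v1 beta shape of the API as of early 2024, with the tool types `code_interpreter`, `retrieval` and `function`. The model name, instructions and the `create_ticket` function are hypothetical placeholders.

```python
import json


def build_assistant_payload(model: str, instructions: str, functions: list) -> dict:
    """Build the body of a POST /v1/assistants request (Assistants API v1 beta, assumed)."""
    tools = [
        {"type": "code_interpreter"},  # sandboxed Python execution
        {"type": "retrieval"},  # search through attached files
    ]
    # Each custom function is wrapped in its own "function" tool entry.
    tools += [{"type": "function", "function": f} for f in functions]
    return {"model": model, "instructions": instructions, "tools": tools}


# Hypothetical function definition, for illustration only.
create_ticket = {
    "name": "create_ticket",
    "description": "Create a ticket in the issue tracker.",
    "parameters": {
        "type": "object",
        "required": ["title"],
        "properties": {"title": {"type": "string", "description": "Ticket title"}},
    },
}

payload = build_assistant_payload(
    "gpt-4-turbo-preview", "You are a helpful back-office assistant.", [create_ticket]
)
print(json.dumps(payload, indent=2))
```

A single assistant declares every tool it may use. The API then decides, run by run, which of them to call.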

This opens up numerous possibilities for natural language functionalities: analyzing data from a local database, searching through internal documentation, generating reports in CSV format, generating charts, creating Jira tickets, and more.

Persistent Conversation Memory

The Assistants API introduces a concept of conversation threads, similar to those you can directly use in ChatGPT.

When a new message is posted to a thread and a run is started on it, the Assistants API automatically takes the thread's conversation history into account.

You therefore no longer need to store the history yourself, or summarize it, before asking a new question.
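The thread stores the history, so a follow-up question only requires posting the new message and starting a run. The Python sketch below builds those two requests with the standard library and does not send them. It assumes the v1 REST endpoints and the `OpenAI-Beta: assistants=v1` header that applied in early 2024. The API key and the thread and assistant IDs are made up.

```python
import json
import urllib.request

API = "https://api.openai.com/v1"


def follow_up_requests(api_key: str, thread_id: str, assistant_id: str, question: str) -> list:
    """Build (but do not send) the two calls needed to ask a follow-up question.

    Only the new message is sent: the thread already holds the earlier turns.
    """
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
        "OpenAI-Beta": "assistants=v1",  # beta header assumed for the v1 Assistants API
    }

    def post(path: str, body: dict) -> urllib.request.Request:
        return urllib.request.Request(
            f"{API}{path}", data=json.dumps(body).encode(), headers=headers, method="POST"
        )

    return [
        # 1. Append the user's message to the existing thread.
        post(f"/threads/{thread_id}/messages", {"role": "user", "content": question}),
        # 2. Start a run: the assistant reads the whole thread history by itself.
        post(f"/threads/{thread_id}/runs", {"assistant_id": assistant_id}),
    ]


reqs = follow_up_requests("YOUR_API_KEY", "thread_abc", "asst_123", "And the day before?")
for r in reqs:
    print(r.get_method(), r.full_url)
```

Each request would be sent with `urllib.request.urlopen`. The official SDKs, such as openai-php/client, wrap these calls.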

Data Retrieval

The Assistants API allows attaching files (PDF, CSV and more; OpenAI's documentation lists every supported format):

- To an assistant, for use in all threads

- To a message in a thread

These files can be used to answer questions, for example a natural-language question about business or technical documentation. The code interpreter can also use them, for instance to generate a chart from a CSV file.

Moreover, when the content of a file is used to generate a response, the response message contains annotations that point to the file and to the location of the quoted text. This makes it possible to link back to the original file.

Hence, generating embeddings and storing data in a vector database is no longer necessary.
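A client can walk the annotations of a text content part to turn them into footnotes. The sketch below assumes the v1 message shape: a `text.value` string plus `file_citation` annotations, each carrying the marker text the model inserted, character offsets, a `file_id` and a quote. The sample message and file ID are invented.

```python
def add_footnotes(content: dict) -> str:
    """Turn file citations in a message text part into numbered footnotes."""
    text = content["text"]["value"]
    notes = []
    for ann in content["text"]["annotations"]:
        if ann["type"] != "file_citation":
            continue  # other annotation types (e.g. file paths) are left untouched
        cite = ann["file_citation"]
        number = len(notes) + 1
        # The marker (e.g. "【4:0†source】") is the text the model inserted in its answer.
        text = text.replace(ann["text"], f" [{number}]", 1)
        notes.append(f"[{number}] {cite['file_id']}: \"{cite.get('quote', '')}\"")
    return text + ("\n\n" + "\n".join(notes) if notes else "")


# Invented sample message part, shaped like a v1 Assistants API message.
message_part = {
    "type": "text",
    "text": {
        "value": "Returns are accepted within 30 days【4:0†source】.",
        "annotations": [
            {
                "type": "file_citation",
                "text": "【4:0†source】",
                "start_index": 35,
                "end_index": 47,
                "file_citation": {"file_id": "file-abc123", "quote": "within 30 days of delivery"},
            }
        ],
    },
}

print(add_footnotes(message_part))
```

The `file_id` can then be resolved through the Files API to display the original file name, or to link to the file.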

Code Interpreter

The Assistants API includes the ability to generate and execute Python code in a sandboxed environment.

This feature is essential for use cases such as:

- Generating files: CSV, PDF, etc.

- Analyzing files, for example computing statistics from a CSV file

- Creating charts

- Performing arithmetic or other calculations, for example computing the date of the first day of the previous month
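For the date calculation in the last item, the code interpreter might write and run something along these lines. This is only an illustrative sketch; the code the model actually generates will vary.

```python
from datetime import date, timedelta


def first_day_of_previous_month(today: date) -> date:
    # Step back one day from the 1st of the current month to land in the previous month...
    last_day_of_previous_month = today.replace(day=1) - timedelta(days=1)
    # ...then move to the 1st of that month.
    return last_day_of_previous_month.replace(day=1)


print(first_day_of_previous_month(date(2024, 3, 15)))  # 2024-02-01
print(first_day_of_previous_month(date(2024, 1, 10)))  # 2023-12-01
```

Going through the last day of the previous month avoids month-length and year-boundary edge cases. LLMs often get this kind of calculation wrong when they "compute" it in text rather than in code.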

Both the generated code and its output are exposed by the Assistants API, in the steps of the run.
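The following sketch pulls the code and its logs out of a run step. It assumes the v1 run step shape, where `step_details.tool_calls[]` entries of type `code_interpreter` carry an `input` string and a list of `outputs`, either `logs` or `image`. The sample step is invented.

```python
def code_interpreter_calls(run_step: dict) -> list:
    """Return (code, log outputs) for each code interpreter call in a run step."""
    details = run_step.get("step_details", {})
    if details.get("type") != "tool_calls":
        return []  # e.g. a "message_creation" step
    results = []
    for call in details["tool_calls"]:
        if call["type"] != "code_interpreter":
            continue  # function or retrieval calls are skipped here
        ci = call["code_interpreter"]
        # Keep text logs only; image outputs reference a file_id instead.
        logs = [o["logs"] for o in ci["outputs"] if o["type"] == "logs"]
        results.append((ci["input"], logs))
    return results


# Invented sample run step, shaped like a v1 Assistants API run step.
step = {
    "id": "step_abc123",
    "type": "tool_calls",
    "step_details": {
        "type": "tool_calls",
        "tool_calls": [
            {
                "id": "call_abc123",
                "type": "code_interpreter",
                "code_interpreter": {
                    "input": "print(6 * 7)",
                    "outputs": [{"type": "logs", "logs": "42\n"}],
                },
            }
        ],
    },
}

for code, logs in code_interpreter_calls(step):
    print(code, logs)
```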

Function Calls

This feature is arguably the most interesting and powerful of the Assistants API.

It lets you provide an assistant with a list of functions. Each function is defined in JSON (expected parameters, their formats, etc.) and comes with a description explaining how to use it.

Thus, when a message is posted in a thread, the Assistants API will evaluate whether one or more functions can be used to provide a response.

For example, consider the JSON definition of the parameters of a hypothetical function, execute_sql_query:

```json
{
  "type": "object",
  "required": [
    "query"
  ],
  "properties": {
    "query": {
      "type": "string",
      "description": "The SQL query"
    }
  }
}
```

The function's description would explain the database schema (tables, columns, relations, etc.), either in natural language or in SQL.
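Here is a sketch of the complete tool definition, with the parameters above and a schema embedded in the description. It assumes the v1 `{"type": "function", "function": {...}}` wrapper and MySQL `CREATE TABLE` statements as the schema format. The tables themselves are hypothetical.

```python
import json

# Hypothetical schema, used only for this example.
SCHEMA = """
CREATE TABLE customers (id INT PRIMARY KEY, email VARCHAR(255));
CREATE TABLE orders (
  id INT PRIMARY KEY,
  customer_id INT REFERENCES customers(id),
  total DECIMAL(10, 2),
  created_at DATETIME
);
"""

execute_sql_query_tool = {
    "type": "function",
    "function": {
        "name": "execute_sql_query",
        # The schema goes into the description so the model can write valid queries.
        "description": "Run a read-only MySQL query against the shop database. "
        "Schema:\n" + SCHEMA.strip(),
        "parameters": {
            "type": "object",
            "required": ["query"],
            "properties": {"query": {"type": "string", "description": "The SQL query"}},
        },
    },
}

print(json.dumps(execute_sql_query_tool, indent=2))
```

Stating the column types (here `created_at DATETIME`) helps the model choose correct date comparisons.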

When you ask in a thread "How many orders were placed yesterday?", the Assistants API may decide to use the available execute_sql_query function, which expects a SQL query as its sole argument.

The LLM then generates the query from the schema given in the function description, and asks for the function to be called with that query, for instance:

```sql
SELECT COUNT(*) AS order_count
FROM orders
WHERE created_at >= CURDATE() - INTERVAL 1 DAY
  AND created_at < CURDATE()
```

The real power behind these functions is that you (your code / application) actually execute them, with the handler of your choice. This means:

- You have full control over what is requested

- You have full control over the response you will provide to the Assistants API

That's a key point: the Assistants API is completely unaware of how the functions are executed. The function definitions act as a contract between your code / application and the Assistants API.

Once your code / application has executed the function with the provided arguments, it submits the output to the Assistants API. The API then continues processing and decides how to proceed: it may call additional functions, use other tools (e.g. the code interpreter) or, once all the necessary information has been gathered, generate an answer.
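The following sketch shows a handler that exercises this control. It accepts only single SELECT statements, caps the number of rows, and decides exactly what JSON goes back to the model. It is a hypothetical implementation of the execute_sql_query function, and an in-memory SQLite database stands in for the real one.

```python
import json
import sqlite3


def execute_sql_query(conn: sqlite3.Connection, query: str, max_rows: int = 100) -> str:
    """Hypothetical handler: the application decides what runs and what is returned."""
    # Naive guard: a single SELECT statement only (rejects any other ";", even in literals).
    stripped = query.strip().rstrip(";")
    if not stripped.lower().startswith("select") or ";" in stripped:
        return json.dumps({"error": "Only single SELECT queries are allowed."})
    try:
        cursor = conn.execute(stripped)
    except sqlite3.Error as exc:
        # Returning the error lets the model fix its query and try again.
        return json.dumps({"error": str(exc)})
    columns = [c[0] for c in cursor.description]
    rows = [dict(zip(columns, row)) for row in cursor.fetchmany(max_rows)]
    return json.dumps({"rows": rows})


# Demo: an in-memory SQLite database standing in for the real one.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, created_at TEXT)")
conn.executemany(
    "INSERT INTO orders (created_at) VALUES (?)",
    [("2024-03-14",), ("2024-03-14",), ("2024-03-15",)],
)
print(execute_sql_query(conn, "SELECT COUNT(*) AS order_count FROM orders WHERE created_at = '2024-03-14'"))
print(execute_sql_query(conn, "DELETE FROM orders"))
```

String checks like these are easy to get wrong. In production, connecting with a read-only database user is a sturdier safeguard, with the guard above as an extra layer.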
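The submit step can be sketched as follows. When a run reaches the `requires_action` status, each requested function call is executed and the outputs are collected, keyed by tool call ID. The sketch assumes the v1 run shape (`required_action.submit_tool_outputs.tool_calls`, with `arguments` as a JSON-encoded string) and the `submit_tool_outputs` endpoint. The handler is a stub and the run is invented.

```python
import json
from typing import Callable, Dict


def build_tool_outputs(run: dict, handlers: Dict[str, Callable[..., str]]) -> list:
    """Execute every function call requested by a run and collect the outputs to submit."""
    if run.get("status") != "requires_action":
        return []
    outputs = []
    for call in run["required_action"]["submit_tool_outputs"]["tool_calls"]:
        name = call["function"]["name"]
        handler = handlers.get(name)
        try:
            # Arguments arrive as a JSON-encoded string generated by the model.
            args = json.loads(call["function"]["arguments"])
            output = handler(**args) if handler else json.dumps({"error": f"Unknown function: {name}"})
        except (json.JSONDecodeError, TypeError) as exc:
            # Malformed or unexpected arguments: report the problem back to the model.
            output = json.dumps({"error": str(exc)})
        outputs.append({"tool_call_id": call["id"], "output": output})
    return outputs


# Stub handler and invented run, for illustration only.
handlers = {"execute_sql_query": lambda query: json.dumps({"rows": [{"order_count": 42}]})}
run = {
    "id": "run_abc",
    "status": "requires_action",
    "required_action": {
        "type": "submit_tool_outputs",
        "submit_tool_outputs": {
            "tool_calls": [
                {
                    "id": "call_abc",
                    "type": "function",
                    "function": {"name": "execute_sql_query", "arguments": "{\"query\": \"SELECT 1\"}"},
                }
            ]
        },
    },
}

# This body would be POSTed to /v1/threads/{thread_id}/runs/{run_id}/submit_tool_outputs.
body = {"tool_outputs": build_tool_outputs(run, handlers)}
print(json.dumps(body))
```

Errors go back to the model as function outputs instead of raising exceptions, so the model gets a chance to correct its call.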

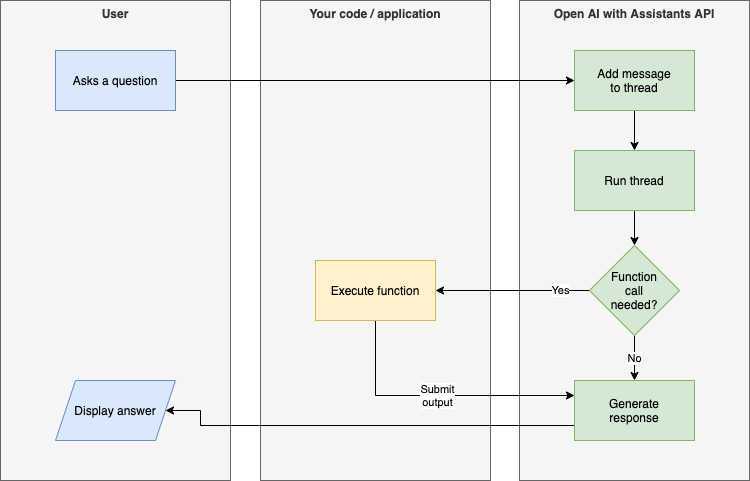

Here is an overview of how things work:

This diagram overly simplifies how the Assistants API works, and only convers the function tool, but it gives a quick overview of how everything relates.

Although function calling looks amazing, keep in mind that as of March 2024, GPT-4 models have a function calling accuracy of around 83%, that is, the ability of a model to call the right function at the right time.

To learn more, you should read this tweet from @burkov and his paper.

___

Note that at the time of writing (March 2024), the Assistants API still requires polling to retrieve the status of a thread's runs.

Regarding pricing: in addition to the GPT token usage cost, the Assistants API charges per code interpreter session, plus a daily fee per GB of files stored for retrieval.
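A polling loop can be sketched as follows. It stops on a final status, or on `requires_action` when function outputs must be submitted, and backs off between calls. The status names are those of the v1 runs (`queued`, `in_progress`, `requires_action`, `cancelling`, `cancelled`, `failed`, `completed`, `expired`). The `fetch_run` callable is a hypothetical stand-in for a `GET /v1/threads/{thread_id}/runs/{run_id}` request.

```python
import time
from typing import Callable

# Statuses after which polling should stop (v1 Assistants API, as assumed here).
STOP_STATUSES = {"completed", "failed", "cancelled", "expired", "requires_action"}


def wait_for_run(
    fetch_run: Callable[[], dict],
    interval: float = 1.0,
    max_interval: float = 8.0,
    timeout: float = 300.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Poll a run until it finishes or needs tool outputs, with exponential backoff."""
    waited = 0.0
    while True:
        run = fetch_run()
        if run["status"] in STOP_STATUSES:
            return run
        if waited >= timeout:
            raise TimeoutError(f"Run still {run['status']} after {waited:.0f}s")
        sleep(interval)
        waited += interval
        # Back off progressively to limit the number of API calls.
        interval = min(interval * 2, max_interval)


# Demo with a fake fetcher instead of real HTTP calls.
fake_statuses = iter(["queued", "in_progress", "completed"])
result = wait_for_run(lambda: {"status": next(fake_statuses)}, sleep=lambda s: None)
print(result["status"])  # completed
```

Injecting `fetch_run` and `sleep` keeps the loop independent of any HTTP client and makes it easy to test.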

Useful resources, such as the Assistants API documentation and API reference, are available on OpenAI's website.

As a final word, I want to thank the folks behind openai-php/client, who did a great job implementing the whole Assistants API in their PHP client.

I'm Michael BOUVY, CTO and co-founder of Click&Mortar, a digital agency based in Paris, France, specialized in e-commerce.

Over the last years, I've worked as an Engineering Manager and CTO for brands like Zadig&Voltaire and Maisons du Monde.

With more than 10 years of experience with e-commerce platforms, I'm always looking for new challenges: feel free to get in touch!